Embedded NTP Server

Now that I had the results I wanted from my embedded NTP client, I wanted to convert it into an NTP server. I also wanted it to support interleaved NTP mode, so that every timestamp could be a hardware timestamp.

Basic NTP server

First, I wanted to get a basic NTP server working. I already had most of the code for this. From previous measurements, I knew the delay between submitting the packet to LwIP and the packet actually being transmitted was between 12.8us and 16.1us. I chose the smallest value and moved the transmit timestamp forward by that amount. I also added RX and TX adjustments according to the PHY's datasheet.
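The transmit compensation described above can be sketched as follows. This is a minimal illustration and not the code from the project: the names `ntp_ts_t`, `TX_DELAY_NS`, `ns_to_ntp_frac` and `compensate_tx` are made up for the example, and it assumes the timestamp is held as a 64-bit NTP value (32 bits of seconds, 32 bits of binary fraction).

```c
#include <stdint.h>

/* 64-bit NTP timestamp: upper 32 bits are seconds, lower 32 bits are
 * a binary fraction of a second (1 unit = 1/2^32 s). */
typedef uint64_t ntp_ts_t;

/* Hypothetical constant: the smallest measured submit-to-wire delay, 12.8 us. */
#define TX_DELAY_NS 12800u

/* Convert a nanosecond interval (must be below 1 s) to NTP fraction units. */
static uint32_t ns_to_ntp_frac(uint32_t ns)
{
    return (uint32_t)(((uint64_t)ns << 32) / 1000000000u);
}

/* Move a software transmit timestamp forward by the expected delay.
 * Adding to the combined 64-bit value lets a fraction overflow carry
 * into the seconds field automatically. */
static ntp_ts_t compensate_tx(ntp_ts_t sw_tx)
{
    return sw_tx + ns_to_ntp_frac(TX_DELAY_NS);
}
```

Using the smallest measured delay means the compensated timestamp never claims the packet left later than it really did, at the cost of a small residual error when the actual delay is longer.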

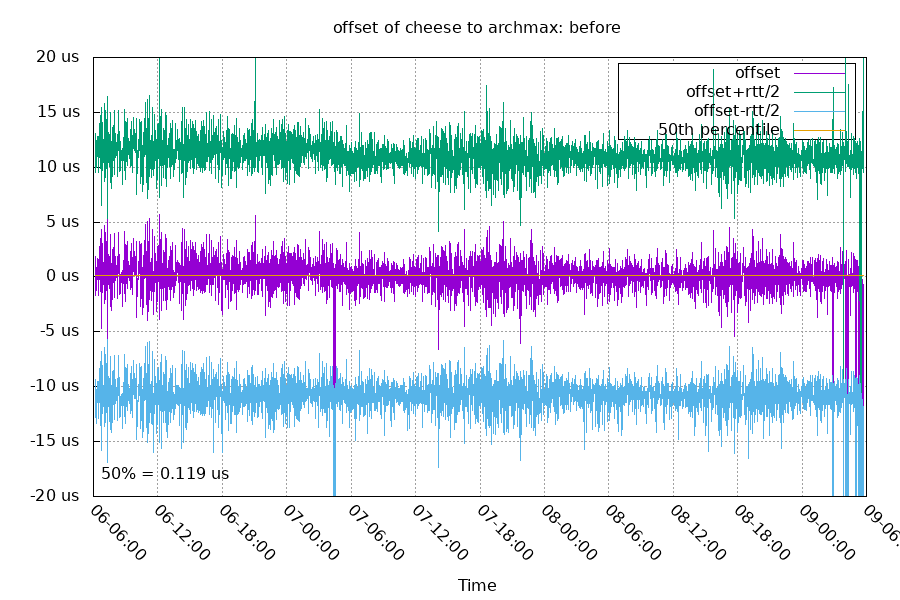

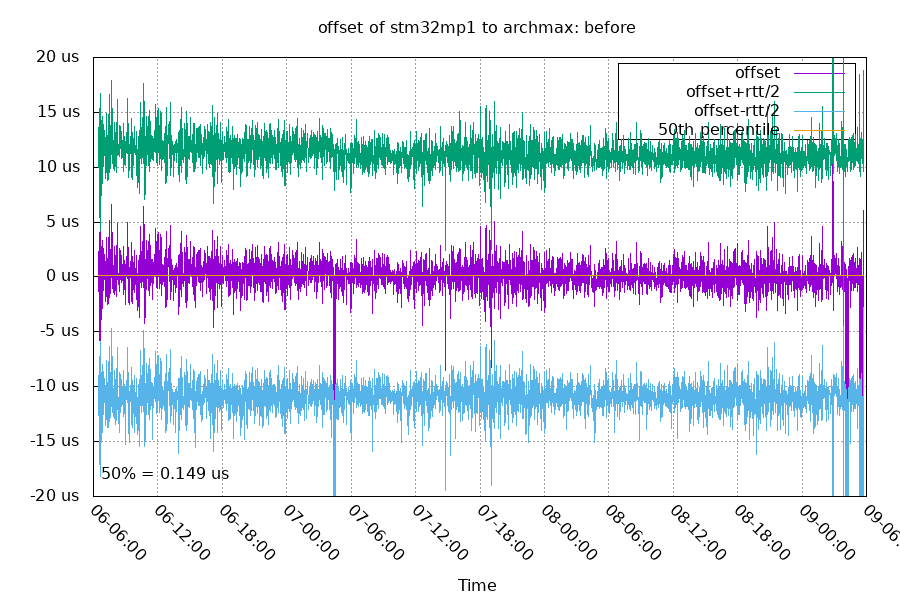

As usual, I measured it from my other stratum 1 NTP servers with hardware timestamps enabled.

I restarted the archmax NTP server a few times during this time period, to adjust the software. On day 7 at around 05:00, I added a compensation to the RX timestamp to lower the offset by 375ns.

This is a decent result. The difference between the mean RTT and the max RTT is 3.8us and the RTT stddev is 408ns.

Interleaved NTP

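Basic NTP can give these clients hardware timestamps on three of the four timestamps in an exchange. The exception is the server's transmit timestamp, which has to be written into the packet before the packet is actually sent. If I want hardware timestamps for all four, I need interleaved NTP.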
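For reference, the four timestamps are the client transmit (t1), server receive (t2), server transmit (t3) and client receive (t4) times, and a client combines them into an offset and a round-trip delay with the standard NTP formulas. The sketch below assumes all four have already been converted to signed nanoseconds; the struct and function names are made up for the example.

```c
#include <stdint.h>

/* One NTP exchange, all values in nanoseconds.
 * t1 and t4 are read from the client's clock, t2 and t3 from the server's. */
typedef struct {
    int64_t t1; /* client transmit */
    int64_t t2; /* server receive  */
    int64_t t3; /* server transmit */
    int64_t t4; /* client receive  */
} ntp_sample_t;

/* Estimated offset of the server clock relative to the client clock. */
static int64_t ntp_offset_ns(const ntp_sample_t *s)
{
    return ((s->t2 - s->t1) + (s->t3 - s->t4)) / 2;
}

/* Round-trip network delay, excluding the server's processing time. */
static int64_t ntp_delay_ns(const ntp_sample_t *s)
{
    return (s->t4 - s->t1) - (s->t3 - s->t2);
}
```

Any error in t3 goes straight into both results, which is why replacing a software estimate of t3 with a hardware timestamp matters.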

I implemented the server in a simple fashion. It stores the last 100 timestamps, one per source IP. This is enough for my needs, but this hardware has enough memory for tracking timestamps for around 7000 different clients.
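A table like that could look roughly like the sketch below. It is only an illustration under stated assumptions, not the code from the project: the entry layout, the use of IPv4 address 0 as the "unused" marker, the round-robin replacement when the table is full, and all of the names are choices made for the example.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_CLIENTS 100 /* table size mentioned in the text */

typedef struct {
    uint32_t ip;    /* client IPv4 address, 0 = unused slot (assumption) */
    uint64_t rx_ts; /* hardware RX timestamp of the client's last request */
    uint64_t tx_ts; /* hardware TX timestamp of the last response sent */
} client_ts_t;

static client_ts_t clients[MAX_CLIENTS];
static size_t next_slot; /* slot to overwrite when a new IP shows up */

/* Return the saved entry for this IP, or NULL if there is none. */
static client_ts_t *client_find(uint32_t ip)
{
    if (ip == 0)
        return NULL;
    for (size_t i = 0; i < MAX_CLIENTS; i++) {
        if (clients[i].ip == ip)
            return &clients[i];
    }
    return NULL;
}

/* Save timestamps for this IP. A known IP reuses its slot; a new IP
 * takes the slot that was allocated longest ago (round-robin). */
static client_ts_t *client_store(uint32_t ip, uint64_t rx_ts, uint64_t tx_ts)
{
    client_ts_t *c = client_find(ip);
    if (c == NULL) {
        c = &clients[next_slot];
        next_slot = (next_slot + 1) % MAX_CLIENTS;
        c->ip = ip;
    }
    c->rx_ts = rx_ts;
    c->tx_ts = tx_ts;
    return c;
}
```

A linear scan is cheap at 100 entries. Tracking thousands of clients would probably call for a hash on the source IP instead.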

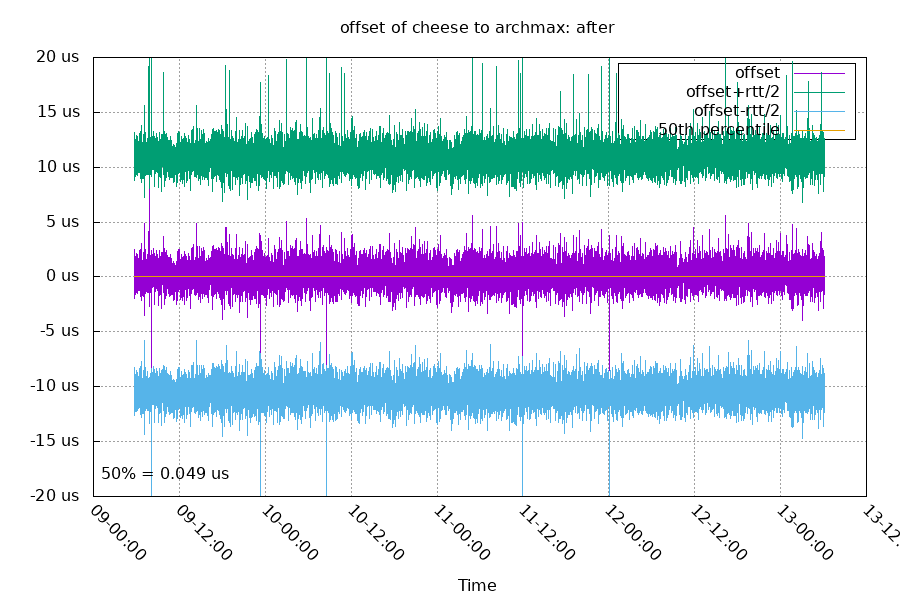

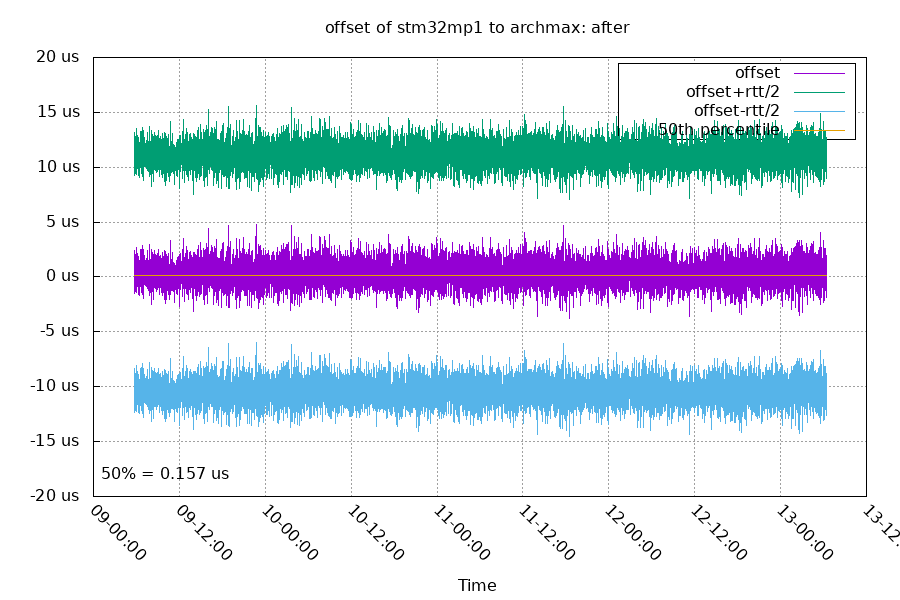

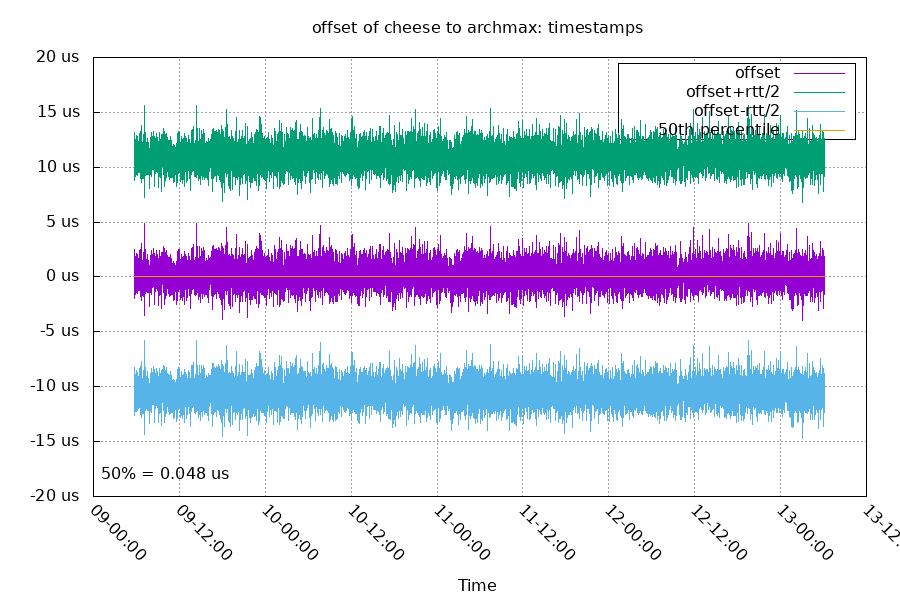

The graphs in this section were taken after I switched the other NTP servers to using interleaved mode with the archmax NTP server.

The stm32mp1 has much less RTT-based noise than the APU2. Looking closer into it, 34 of the APU2's 21,653 samples (0.2%) used kernel timestamps rather than hardware timestamps on the APU2's side. Excluding those samples gives a better graph.

After switching to interleaved mode, the difference between mean RTT and max RTT went from 3.7us to 0.2us. That reduces the amount of jitter introduced by the network. The stddev of RTT values also went from 408ns to 86ns.

Long-term median offsets were all within 200ns, so that looks pretty good. The noise in the offsets is at least partially caused by the local crystal oscillator moving so much.

Code

The code is on GitHub.

Next plans

I've ordered a Teensy 4.1 and the parts needed for Ethernet. I want to port this code over to the Teensy platform. The MCU on the Teensy has a PPS input directly on the NIC's 1588 hardware timer, which should make clock sync easier. It also has more memory and a faster CPU. The 1588 hardware timer is only 32 bits, so another clock in the system will need to track seconds. There are a few other hardware differences that I'll need to account for. The IP stacks on the Teensy are still experimental, so I'll probably need to do extra work to get everything running.