Embedded NTP client/NTP interleaved mode, part 5

I'll test what happens when I plug my embedded NTP client directly into my NTP servers, bypassing the Ethernet switch. This ends my long-running embedded NTP client series.

The systems involved

Embedded NTP client (Archmax): Part 4, Part 3, Part 2, Part 1

NTP server APU2: Part 1

NTP server STM32MP1: Part 3, Part 2, Part 1

APU2

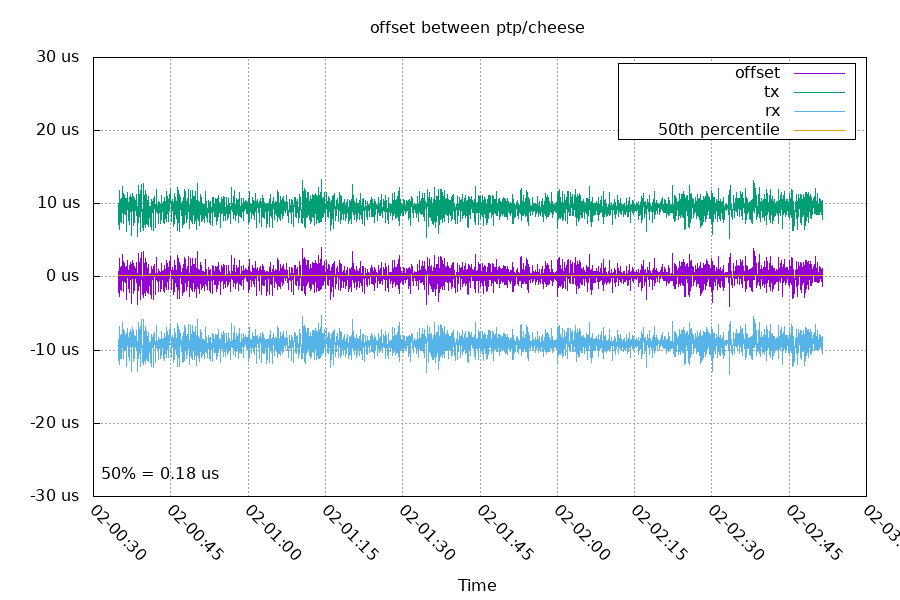

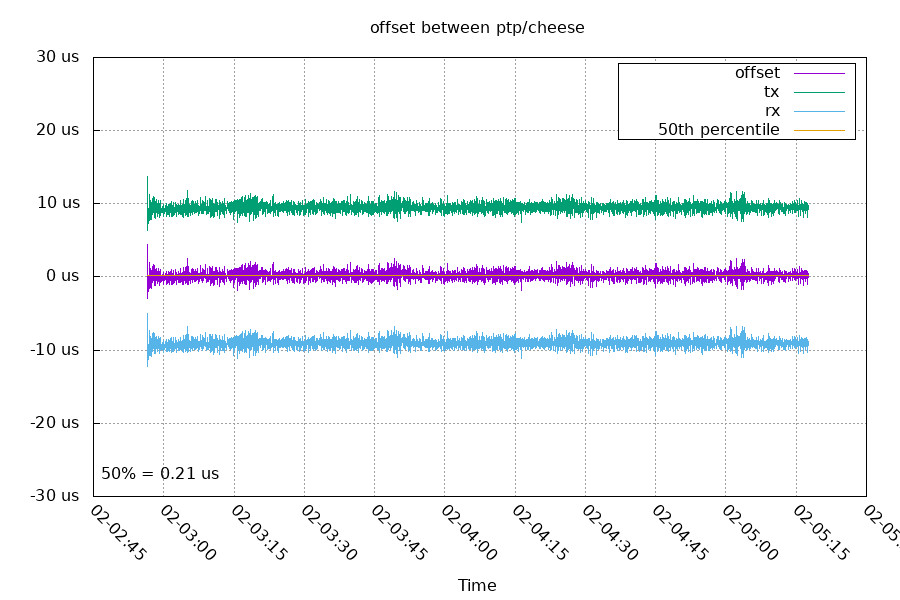

First, I connected the Archmax directly to the secondary port on the APU2. I wanted to see whether there was any difference between the primary and secondary ports.

This combination resulted in a 180ns offset and an 18.5us RTT. The ideal RTT is 15.0us, so there is about 3.5us of extra delay somewhere in the system. Removing the Ethernet switch cut 3.7us from the RTT (it was 22.2us with the switch).

On the primary port, the result was a 210ns offset and an RTT of 18.5us. That is close enough to the secondary port's result to be well within the margin of error.
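One way to account for the 15.0us ideal RTT (and the 1.5us ideal for 1G later on) is pure serialization time. This is a rough sketch under my own assumptions, not something measured: it assumes a 94-byte frame (14-byte Ethernet header, 20-byte IPv4 header, 8-byte UDP header, 48-byte NTP packet with no extension fields, 4-byte FCS) and assumes each direction of the exchange costs exactly one frame time.

```python
# Sketch: ideal NTP RTT from serialization time alone.
# Assumption: 94-byte frame = 14 Ethernet header + 20 IPv4 + 8 UDP
# + 48 NTP (no extension fields) + 4 FCS.
FRAME_BYTES = 14 + 20 + 8 + 48 + 4


def serialization_ns(frame_bytes: int, link_bps: int) -> float:
    """Time to clock one frame onto the wire, in nanoseconds."""
    return frame_bytes * 8 * 1e9 / link_bps


def ideal_rtt_us(link_bps: int) -> float:
    """Assumes each direction (request, response) costs one frame time."""
    return 2 * serialization_ns(FRAME_BYTES, link_bps) / 1000


print(f"{ideal_rtt_us(100_000_000):.2f} us at 100M")  # 15.04 us at 100M
print(f"{ideal_rtt_us(1_000_000_000):.3f} us at 1G")  # 1.504 us at 1G
```

If those assumptions hold, the 10x ratio between the 100M and 1G ideals falls straight out of the link rate, and anything above roughly 15us at 100M is PHY, MAC, or timestamping overhead.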

STM32MP1

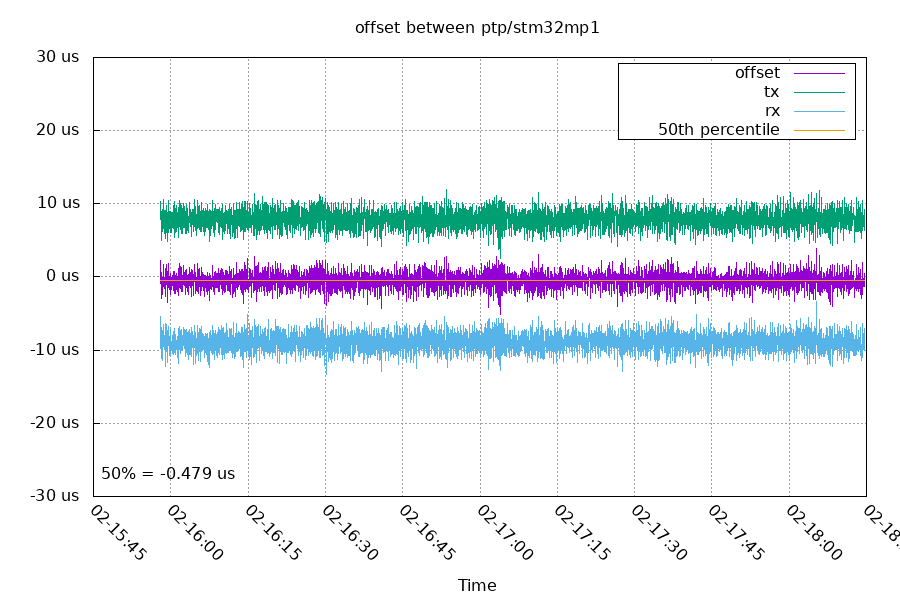

Next, I connected the Archmax directly to the STM32MP1 board.

This resulted in an offset of -479ns and an RTT of 16.7us. Removing the Ethernet switch cut 5.4us from the RTT (it was 22.1us with the switch), but the offset stayed pretty similar with and without the switch (-479ns without vs -430ns with).

The STM32MP1's offset changed only slightly compared to the APU2's, which is probably a result of the APU2's Intel NIC driver. Below is a snippet of the NIC driver code.

The Intel driver compensates for latency in the RX and TX paths, with different values for each link speed. The latency adjustment is added to the TX timestamps and subtracted from the RX timestamps. Both modifications lower the measured RTT, but because the TX and RX values differ, the asymmetry shifts the offset. Interestingly, the Intel NIC still ended up with a higher RTT than the STM32MP1 (18.5us vs 16.7us), even with the -3237ns adjustment at 100M.
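To see why adding to TX timestamps and subtracting from RX timestamps lowers the RTT but also moves the offset, here's a sketch that plugs server-side corrections into the standard NTP offset and delay formulas (RFC 5905). I'm assuming 100M values of 1024ns (TX) and 2213ns (RX); I believe these are `IGB_I210_TX_LATENCY_100` and `IGB_I210_RX_LATENCY_100` in the Linux igb driver, and they sum to the 3237ns above, but check them against your kernel source. The timestamps are a made-up exchange between perfectly synchronized clocks.

```python
# Sketch: effect of per-direction timestamp corrections on NTP offset/RTT.
# t1 = client TX, t2 = server RX, t3 = server TX, t4 = client RX (ns).

def offset_ns(t1, t2, t3, t4):
    """Clock offset as computed by the client (RFC 5905 style)."""
    return ((t2 - t1) + (t3 - t4)) / 2


def rtt_ns(t1, t2, t3, t4):
    """Round-trip delay, excluding the server's turnaround time."""
    return (t4 - t1) - (t3 - t2)


# Assumed 100M corrections (believed to match the Linux igb i210 values).
TX_LATENCY_100_NS = 1024  # added to the server's TX timestamp (t3)
RX_LATENCY_100_NS = 2213  # subtracted from the server's RX timestamp (t2)


def apply_server_corrections(t1, t2, t3, t4):
    """Apply the assumed driver corrections to the server-side timestamps."""
    return t1, t2 - RX_LATENCY_100_NS, t3 + TX_LATENCY_100_NS, t4


# Hypothetical exchange: perfectly synced clocks, 9 us each way, 1 us turnaround.
raw = (0, 9_000, 10_000, 19_000)
fixed = apply_server_corrections(*raw)

print(offset_ns(*raw), rtt_ns(*raw))      # 0.0 18000
print(offset_ns(*fixed), rtt_ns(*fixed))  # -594.5 14763
```

The RTT drops by the full 3237ns, while the offset moves by half the TX/RX difference, (1024 - 2213) / 2 = -594.5ns. Since the driver uses different values at each link speed, that shift changes whenever the link speed changes. Which sign it shows up with in a given measurement depends on which side is the server and on the offset sign convention.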

STM32MP1/APU2

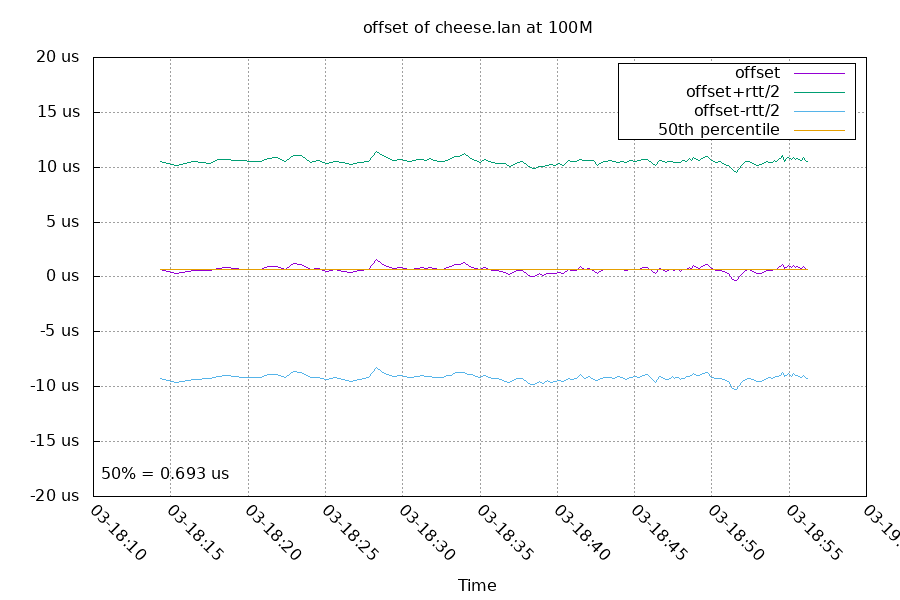

Lastly, I connected the two NTP servers directly together. If everything is consistent, the two NTP servers should have an offset of 479ns+180ns = 659ns at 100M.

The measured 693ns offset is close enough to 659ns to be consistent with all the other results. The RTT was 19.7us.
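The consistency check is just the difference of two offsets measured against the same client clock, so the Archmax's own error cancels out. A quick sketch with the numbers from this post; the sign convention (each server's offset expressed relative to the Archmax) is my assumption.

```python
# Offsets the Archmax measured against each server at 100M (ns).
# Assumption: both are expressed the same way (server relative to Archmax),
# so the Archmax's own clock error cancels when taking the difference.
offset_apu2_ns = 180
offset_stm32mp1_ns = -479

expected_ns = offset_apu2_ns - offset_stm32mp1_ns  # predicted server-to-server offset
measured_ns = 693  # STM32MP1 and APU2 connected directly

print(expected_ns, measured_ns - expected_ns)  # 659 34
```

A 34ns disagreement is well under the +/- 500ns margin of error used in the summary.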

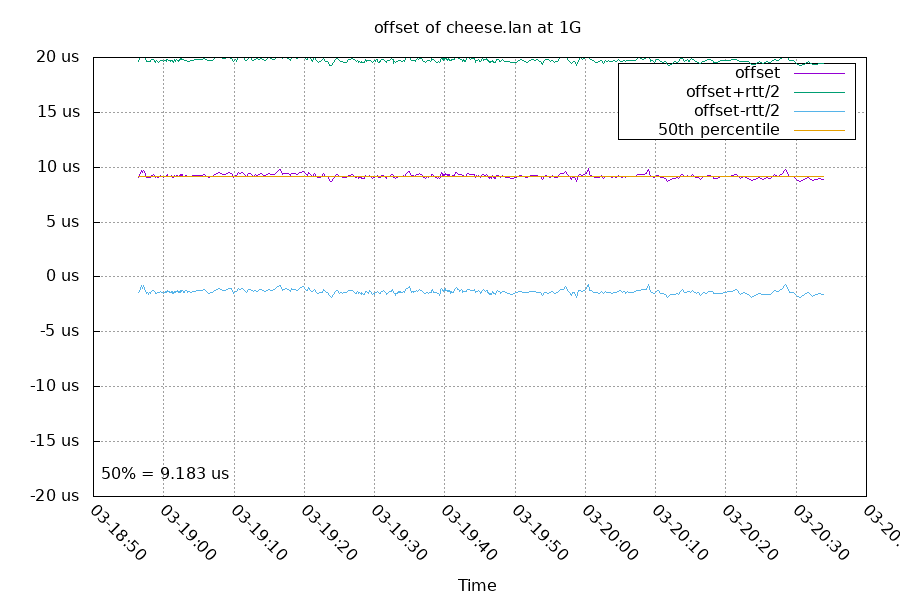

Because the offset is so low at 1G, this offset looks like something specific to 100M mode. This could be in the PHY (such as buffering RX samples for filtering).

100M vs 1G

I was hoping I'd be able to compare a 100M direct connection vs a 1G direct connection, but I got weird results.

Ideally, the RTT should be 1.5us for this test. The NTP response direction is at -1.4us (the blue line), which would be reasonable for a 3us RTT. But the request is being delayed by over 19us (the green line, almost off the graph). The total RTT was 21us, which is oddly higher than the 100M RTT! I suspected Energy Efficient Ethernet (EEE), but disabling it on both sides with ethtool did not affect the RTT or offset. I also tried disabling pause frames, but that didn't change the results either.

Summary

Which one of these clocks is most correct? To tell properly, I would need to measure the actual Ethernet signal against the timestamps. Because I don't have the equipment to do that, I'll instead pick one of the two clocks closest to each other and call it "correct" (with a +/- 500ns margin of error). To eliminate any error when using this NTP server, you would want to take an NTP client and have its NIC output a PPS to compare against the NTP server's PPS. That way, you can be confident the NIC's time doesn't have a hidden offset.
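Picking the two clocks closest to each other can be done straight from the 100M offsets in this post. This sketch compares offset magnitudes only; the pair labels are mine, and it treats the 693ns direct STM32MP1/APU2 result as a measurement of that pair.

```python
# 100M offsets measured in this post, in ns (signs as reported).
pair_offsets_ns = {
    ("Archmax", "APU2"): 180,       # secondary port, direct connection
    ("Archmax", "STM32MP1"): -479,  # direct connection
    ("STM32MP1", "APU2"): 693,      # servers connected to each other
}


def closest_pair(offsets):
    """Return the pair of clocks with the smallest absolute offset."""
    return min(offsets, key=lambda pair: abs(offsets[pair]))


pair = closest_pair(pair_offsets_ns)
print(pair, pair_offsets_ns[pair])  # ('Archmax', 'APU2') 180
```

This only ranks the clocks against each other; the PPS comparison is what would actually show whether either one carries a hidden offset.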