Binary Clock, Update 3

I've made some progress on my binary clock project.

Here's a video of it in its new case: https://www.youtube.com/watch?v=VnHRYwOf2ZQ

I've been tweaking the clock controller code and experimenting with different setups to see how the code reacts.

First Test

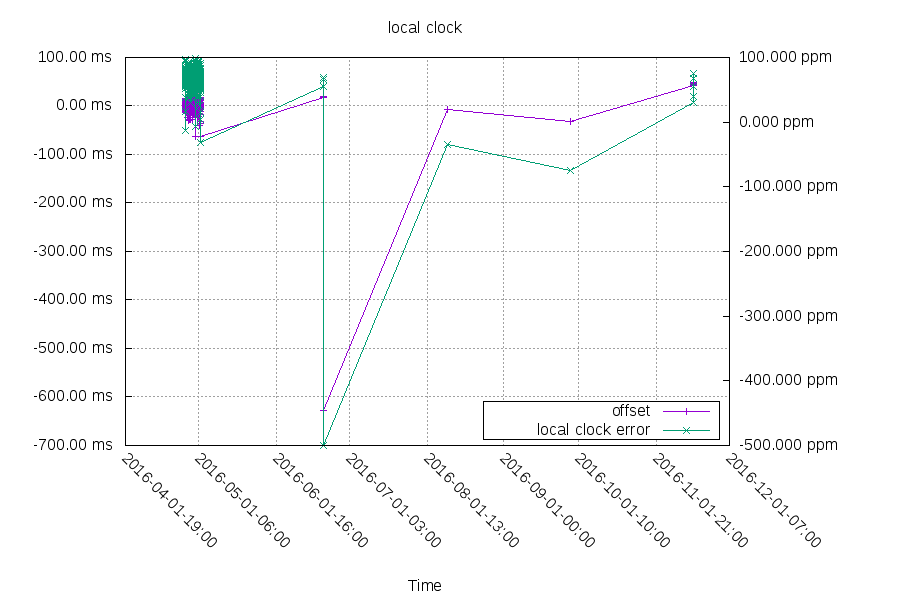

For this test, I just let it run.

This test ran for almost 6 days. Unfortunately, it hit a bug that made the clock jump by 2^32 milliseconds (about 49.7 days) several times. That bug, which accidentally added 2^32 ms to the clock, is fixed now. There's also a second bug: the offset is stored as milliseconds in a 32 bit signed integer, so it can't be very large (2^31 ms is just under 25 days). If the original clock setting is off by months, the offset overflows. I'm just going to work around the second bug by rebooting the esp8266 for now.
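To make the second bug concrete, here's a small sketch of what happens to an offset held in a signed 32 bit millisecond counter. It's emulated in Python (whose integers never overflow on their own), and the names `offset_fits_int32` and `store_as_int32` are hypothetical, not taken from the clock's source code. The wraparound shown is the usual two's-complement behaviour on common compilers.

```python
# Hypothetical illustration: a clock offset kept in milliseconds in a signed
# 32-bit integer, emulated in Python.
INT32_MIN = -(2**31)
INT32_MAX = 2**31 - 1
MS_PER_DAY = 24 * 60 * 60 * 1000


def offset_fits_int32(offset_ms: int) -> bool:
    """True if the offset can be stored in an int32 without overflowing."""
    return INT32_MIN <= offset_ms <= INT32_MAX


def store_as_int32(offset_ms: int) -> int:
    """Emulate two's-complement wraparound when a value is forced into an int32."""
    return (offset_ms + 2**31) % 2**32 - 2**31


# Largest representable offset, in days (just under 25).
MAX_OFFSET_DAYS = INT32_MAX / MS_PER_DAY
```

For example, an initial setting that's 90 days off (`90 * MS_PER_DAY`) doesn't fit, and `store_as_int32` turns it into an offset of roughly -9.4 days, which is wrong in both size and sign.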

Second Test

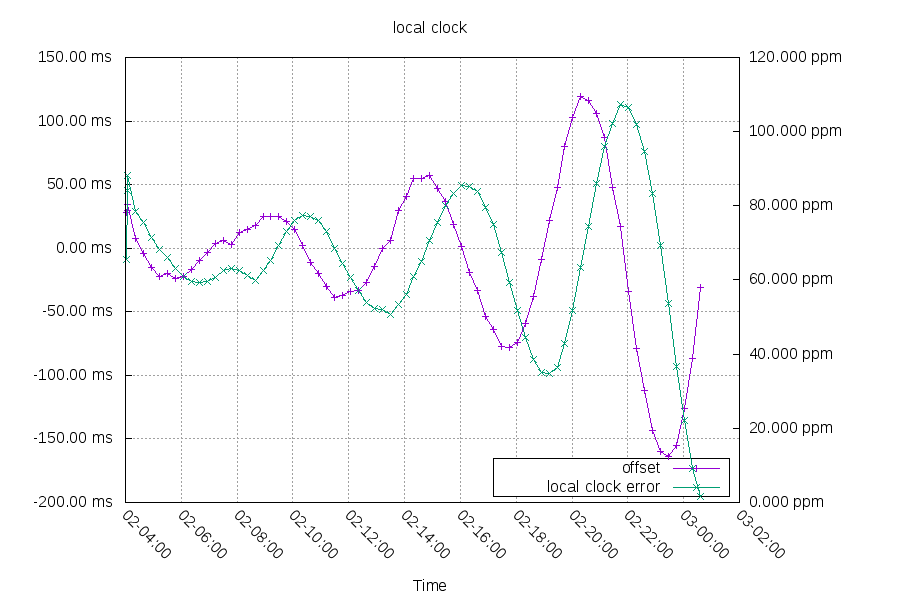

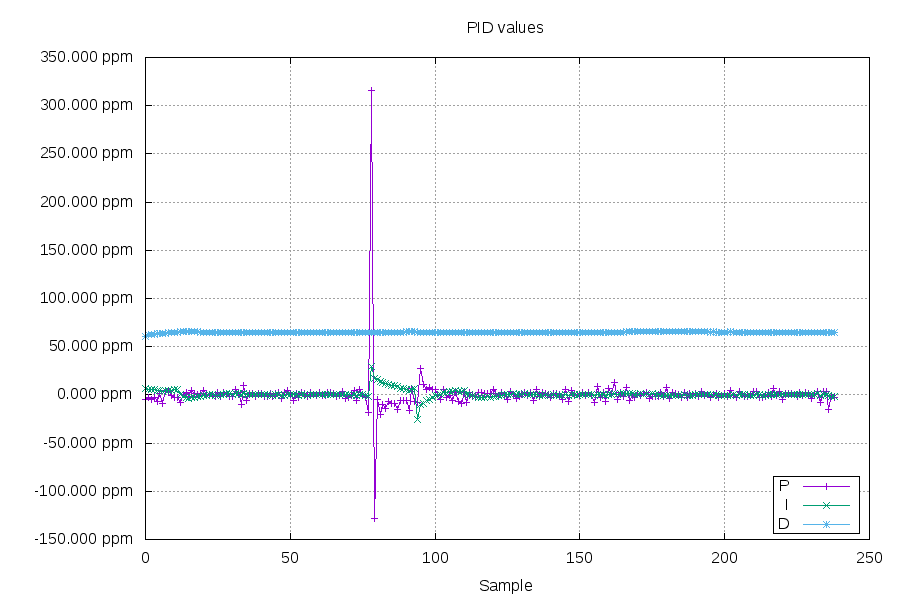

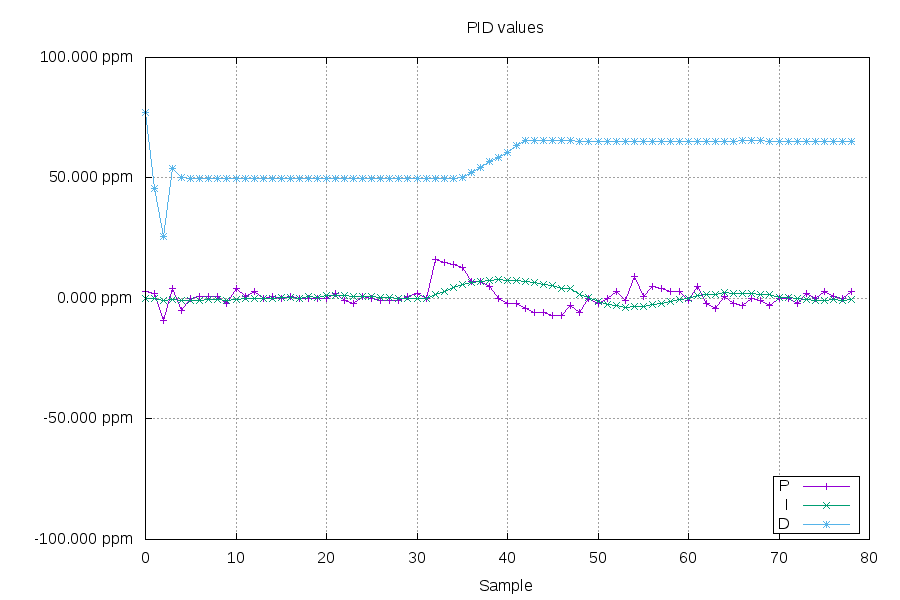

Next, I lowered the proportional constant in the PID controller to see if it would keep better time.

This led to more instability. You can see that the I term is now the one pushing the clock out of balance, and the P term's delayed reaction adds to the problem.
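For readers less familiar with PID loops, here's a minimal textbook PID over NTP offset samples that outputs a clock-rate correction in ppm. It's a sketch, not the controller in this project: the class name, the gains, and the sign convention are assumptions, and the D term here is a plain derivative of the offset, which may be computed differently from the clock's own D term.

```python
from typing import Optional


class OffsetPID:
    """Textbook PID on clock offset; each term is a rate correction in ppm.

    Sign convention (an assumption): offset_ms > 0 means the local clock is
    ahead of the NTP reference, and a positive output means "slow down".
    """

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp = kp  # ppm of correction per ms of current offset
        self.ki = ki  # ppm per (ms * s) of accumulated offset
        self.kd = kd  # scale applied to the measured drift rate (ppm)
        self.integral = 0.0
        self.prev_offset_ms: Optional[float] = None

    def update(self, offset_ms: float, interval_s: float) -> tuple:
        """Return the (P, I, D) contributions in ppm for one NTP sample."""
        p = self.kp * offset_ms
        self.integral += self.ki * offset_ms * interval_s
        d = 0.0
        if self.prev_offset_ms is not None:
            # Offset change in ms per second of elapsed time; 1 ms/s = 1000 ppm.
            slope_ppm = (offset_ms - self.prev_offset_ms) / interval_s * 1000.0
            d = self.kd * slope_ppm
        self.prev_offset_ms = offset_ms
        return p, self.integral, d
```

The total correction is `p + i + d`. Shrinking `kp` makes P respond more weakly to the current offset, so more of the work falls to the slower-moving integral, which is one way the I term can end up dominating and overshooting.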

Third Test

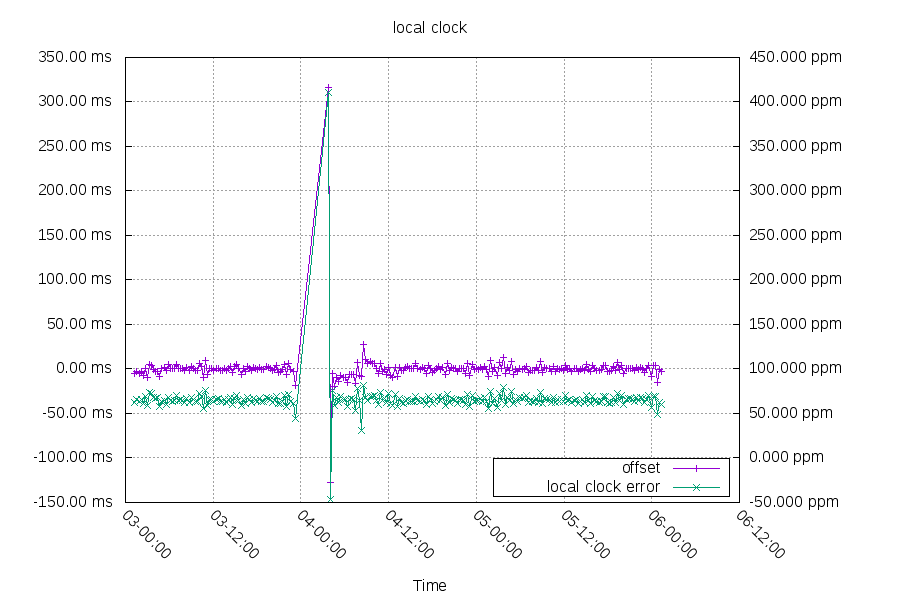

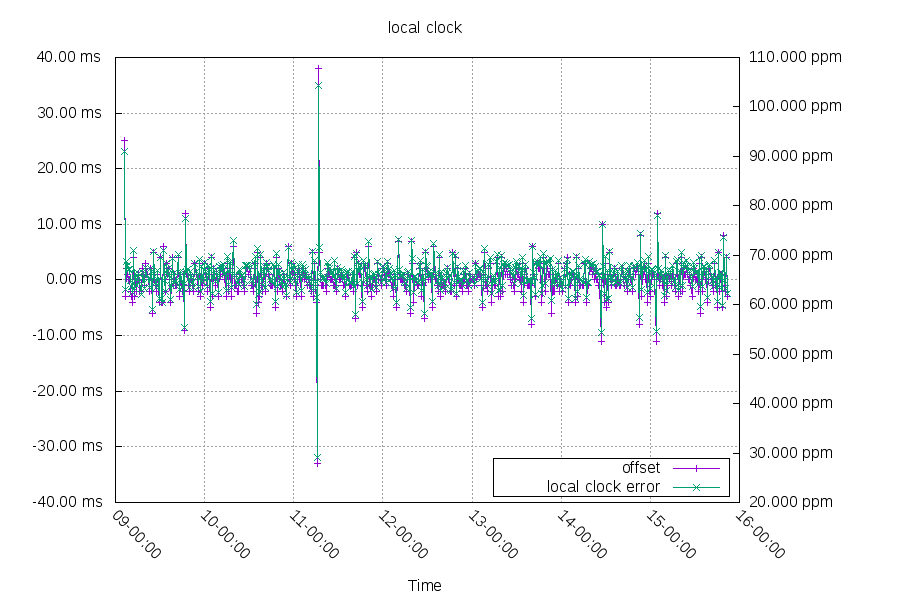

I reset the PID constants back to their original values and let it run again:

Between May 3, 23:12 and May 4, 03:45 my esp8266 was unable to reach its NTP server. This left the clock drifting at the previous rate.

The sample before the packet loss left the P term set to 18 ppm slow:

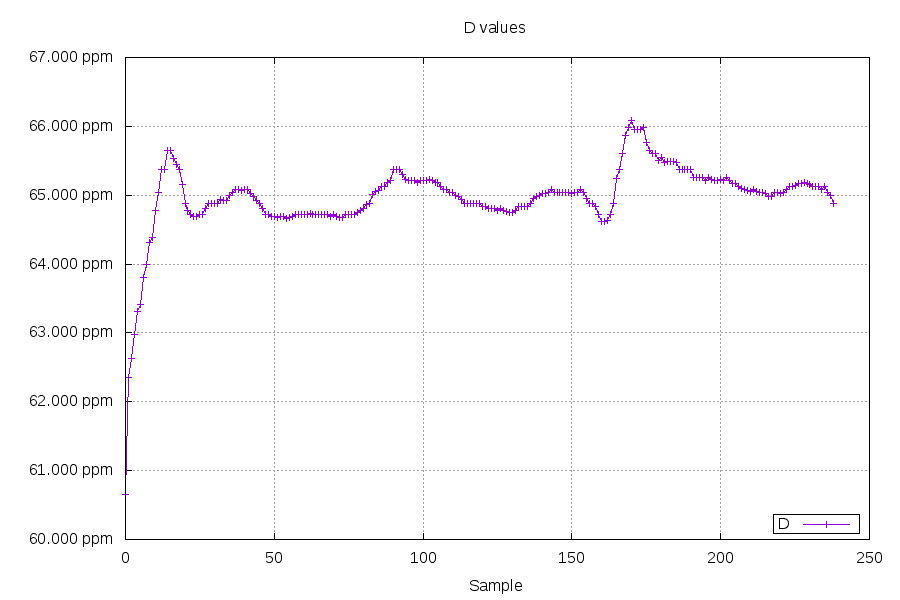

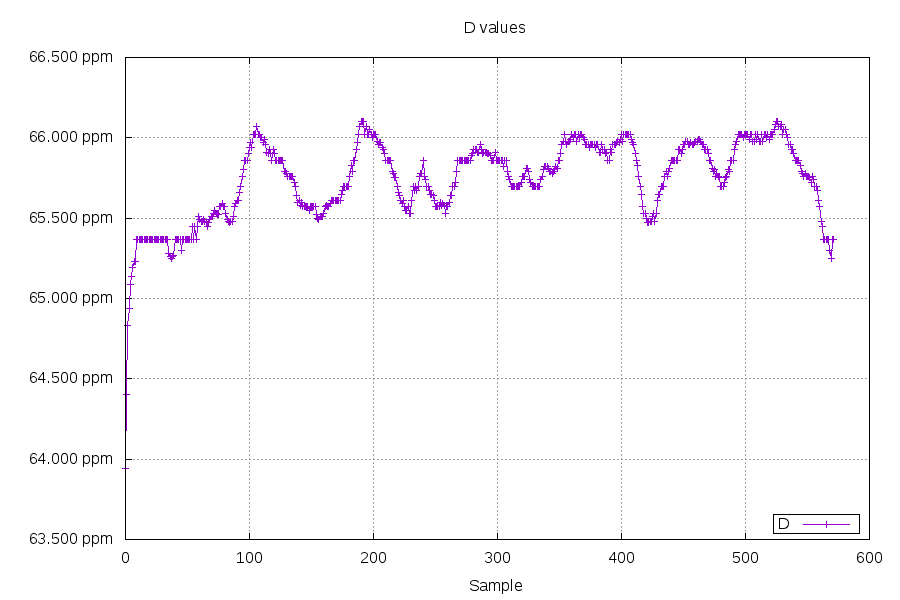

When the source came back 16,399,248 milliseconds later (about 4 hours 33 minutes), the clock was 316ms slow. This is an error of 20.366ppm slow. If the clock had fallen back to just the D term on packet loss, it would have been only ~2ppm slow (around 32ms). This is because the P and I terms hold short-term corrections whose values at the moment of the outage are essentially arbitrary, while the D term is very stable (see below). I've changed the code to fall back to just the D value on packet loss.

I'm happy with how quickly the clock got back into sync after the packet loss. You can see some P+I offsets that quickly settle out.
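The fallback and the drift arithmetic can be sketched like this. The function names are hypothetical, and a real controller would also need to decide when a sample counts as "lost"; this only shows the choice of terms and how a constant rate error turns into accumulated offset.

```python
def predicted_drift_ms(rate_error_ppm: float, elapsed_ms: float) -> float:
    """Offset accumulated while free-running with a constant rate error."""
    return rate_error_ppm * 1e-6 * elapsed_ms


def holdover_correction_ppm(p_ppm: float, i_ppm: float, d_ppm: float,
                            have_fresh_sample: bool) -> float:
    """Use the full PID sum normally, but only the D (rate) term during packet loss."""
    if have_fresh_sample:
        return p_ppm + i_ppm + d_ppm
    return d_ppm
```

With the outage above, `predicted_drift_ms(2.0, 16_399_248)` comes out to about 32.8ms, which matches the "around 32ms" estimate for a D-only fallback.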

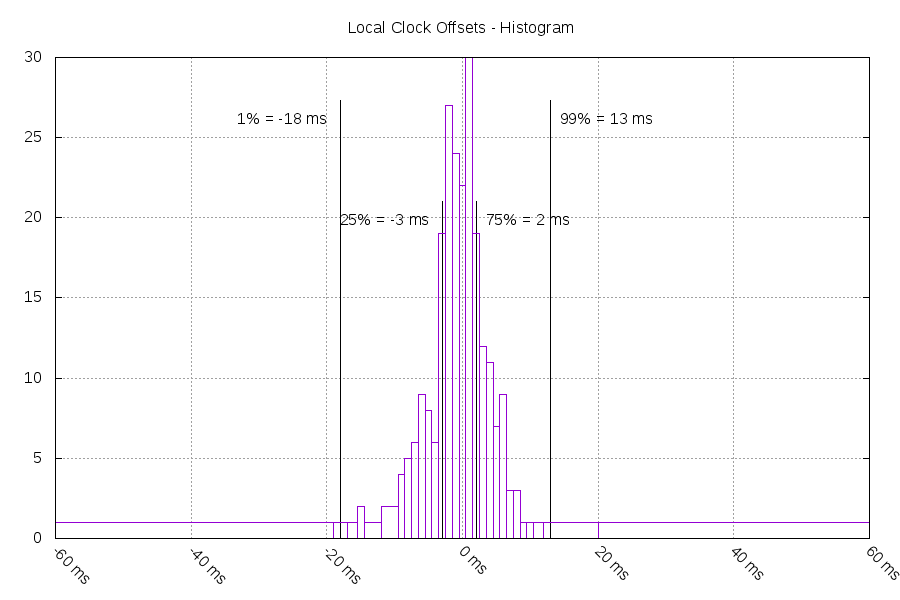

The end result of offsets for this run:

98% of the time, it was within +/-18ms.
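A figure like "98% within +/-18ms" can be computed from the logged offsets in two directions: the share of samples inside a given bound, or the smallest symmetric bound that covers a given share. Here's a minimal sketch of both; the function names are made up for illustration, and the input is assumed to be a plain list of offsets in milliseconds.

```python
import math


def fraction_within(offsets_ms: list, bound_ms: float) -> float:
    """Share of samples whose offset lies within +/- bound_ms."""
    if not offsets_ms:
        raise ValueError("need at least one sample")
    inside = sum(1 for x in offsets_ms if abs(x) <= bound_ms)
    return inside / len(offsets_ms)


def smallest_bound_covering(offsets_ms: list, fraction: float) -> float:
    """Smallest B such that at least `fraction` of samples satisfy |offset| <= B."""
    if not offsets_ms or not 0 < fraction <= 1:
        raise ValueError("need samples and 0 < fraction <= 1")
    magnitudes = sorted(abs(x) for x in offsets_ms)
    needed = max(1, math.ceil(fraction * len(magnitudes)))  # samples that must fit
    return magnitudes[needed - 1]
```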

Fourth Test

Next, I tried using an NTP server on the local network.
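Part of why the server's distance matters is how NTP turns its four timestamps into an offset and a round-trip delay. The sketch below uses the standard on-wire formulas from the NTP specification: t1 is the client's transmit time, t2 the server's receive time, t3 the server's transmit time, and t4 the client's receive time. The function name is just for illustration.

```python
def ntp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple:
    """Standard NTP offset/delay from the four exchange timestamps (same units).

    offset > 0 means the server's clock is ahead of the client's.
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)  # round trip minus server processing time
    return offset, delay
```

Because the calculation assumes the outbound and return paths take equal time, the true offset can be off by up to half the round-trip delay, so a nearby server with shorter round trips tightens that bound.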

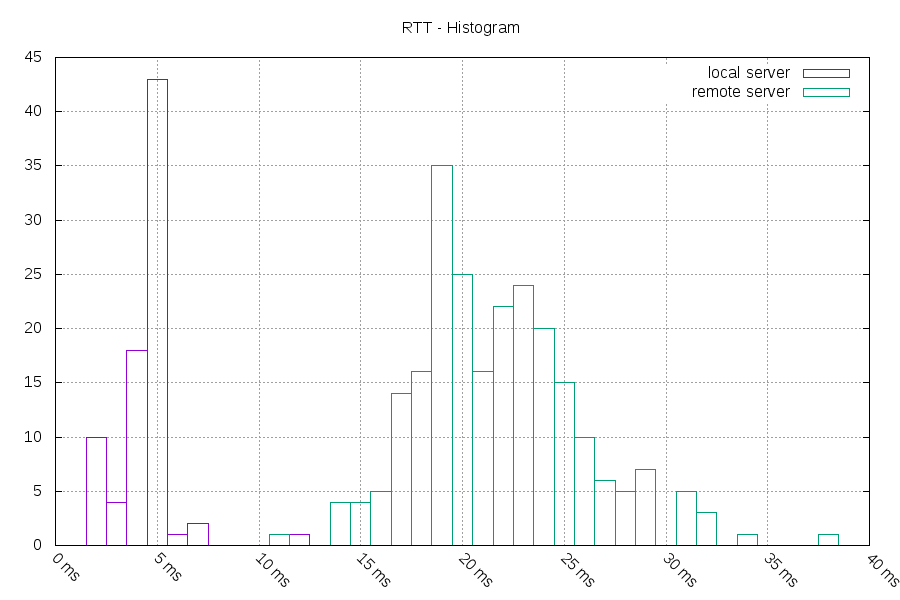

Comparing the round trip time to the two different NTP servers looked like this:

Most (80%) of the 239 round-trip times to my remote NTP server were between 17ms and 27ms.

With the local server, 80% of the 79 round-trip times were between 2ms and 5ms. The esp8266 is on 2.4GHz Wi-Fi, so that's not completely surprising.
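One way to summarize round-trip times like this is the range between the 10th and 90th percentiles, which holds the middle 80% of samples. Here's a sketch using Python's `statistics.quantiles`; the 10th/90th-percentile choice is an assumption, since the ranges above could also come from a different 80% window.

```python
import statistics


def middle_80_percent(rtts_ms: list) -> tuple:
    """(10th percentile, 90th percentile) of the round-trip times."""
    deciles = statistics.quantiles(rtts_ms, n=10)  # 9 cut points; needs >= 2 samples
    return deciles[0], deciles[-1]


def fraction_between(rtts_ms: list, low_ms: float, high_ms: float) -> float:
    """Share of round-trip times that fall inside [low_ms, high_ms]."""
    return sum(1 for r in rtts_ms if low_ms <= r <= high_ms) / len(rtts_ms)
```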

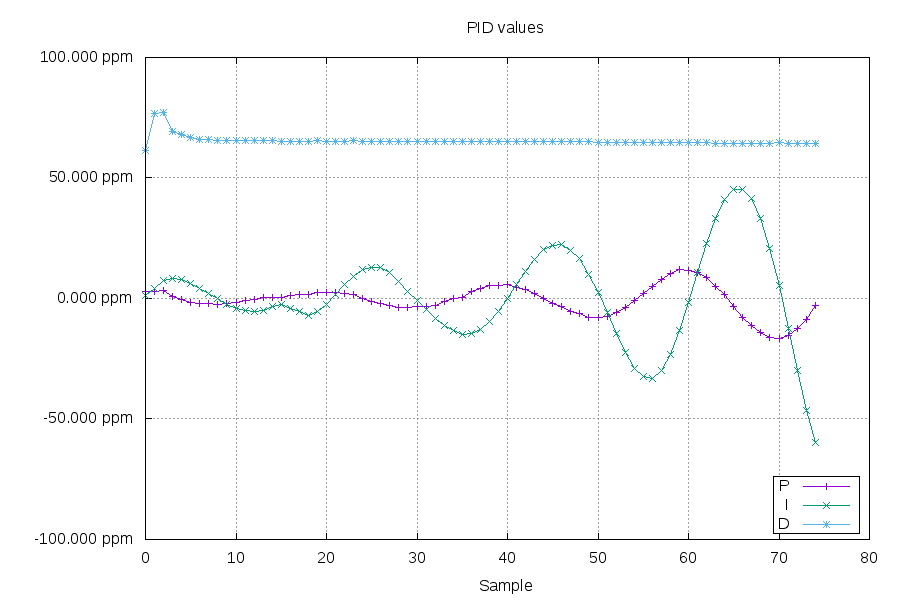

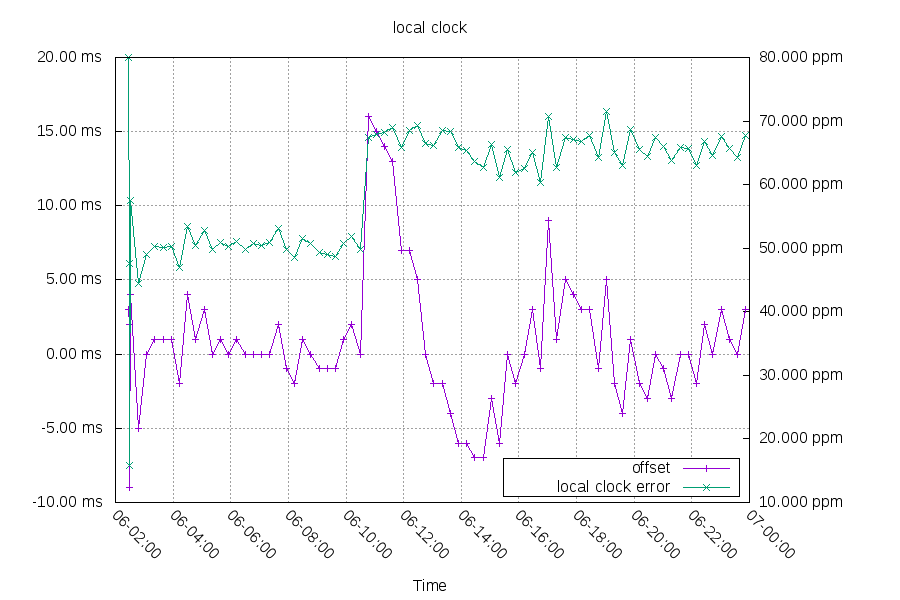

The local clock error rate jumped at 06-10:46.

You can see the offset first driving P at sample 32, then I slowly catching up, and finally D finding the new rate while P and I settle back down.

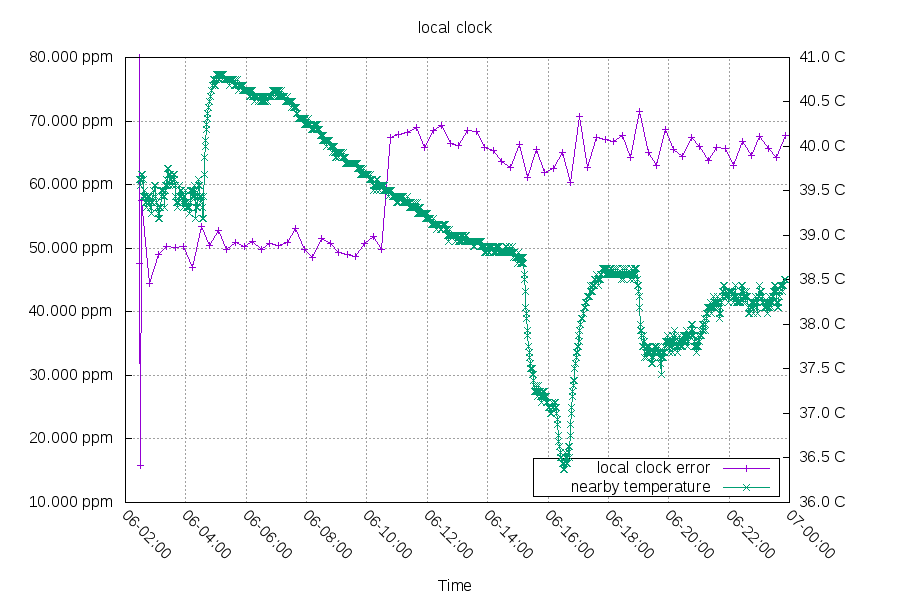

Comparing the local clock error to the MCP9808 temperature probe on a nearby Raspberry Pi:

Maybe a rapid temperature change wasn't the source of this? Odd!

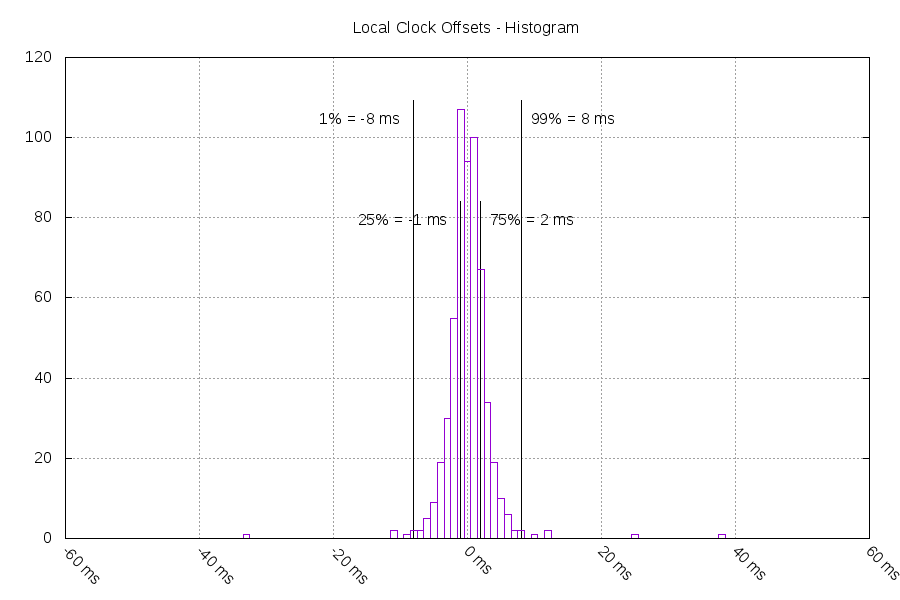

Fifth Test

The fourth test was interrupted when I had to rework my data logging computer's storage setup, so this time I wanted to let it run for a week and see how it did.

The local clock's offset frequently jumps between -1 ms and +2 ms. This is probably due to three factors: network jitter of ~3ms, limited clock rate control (my control steps are around 5ppm wide at 66ppm), and other measurement/rounding errors.
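Here's one hypothetical way steps that coarse can arise. Suppose the rate is adjusted by slewing the clock 1ms once every N whole seconds, so the achievable corrections are 1000/N ppm. That scheme is an assumption for illustration, not necessarily how this clock's controller works, but near 66ppm it gives neighbouring steps about 4 to 5ppm apart, and the steps shrink at lower rates.

```python
def rate_for_interval(n_seconds: int) -> float:
    """ppm correction from slewing 1 ms once every n_seconds (hypothetical scheme)."""
    # 1 ms per (n_seconds * 1000 ms) is a fraction of 1e-3 / n_seconds,
    # which is 1000 / n_seconds parts per million.
    return 1000.0 / n_seconds


def neighbouring_steps(target_ppm: float) -> tuple:
    """Achievable rates just below, at, and just above the one nearest target_ppm."""
    if target_ppm <= 0:
        raise ValueError("target_ppm must be positive")
    n = max(1, round(1000.0 / target_ppm))
    here = rate_for_interval(n)
    finer = rate_for_interval(n + 1)
    coarser = rate_for_interval(n - 1) if n > 1 else float("inf")
    return finer, here, coarser
```

For a 66ppm target this lands on N = 15 (about 66.7ppm), with the next options at 62.5ppm and about 71.4ppm.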

Summary

I've reached my original goal of a local clock within +/-10 ms. I've tested it in a few different configurations and I'm feeling pretty confident about its performance. Now to focus on more features!

See also: Part 2, Part 1, Source Code